What object connects the database to the dataset object?

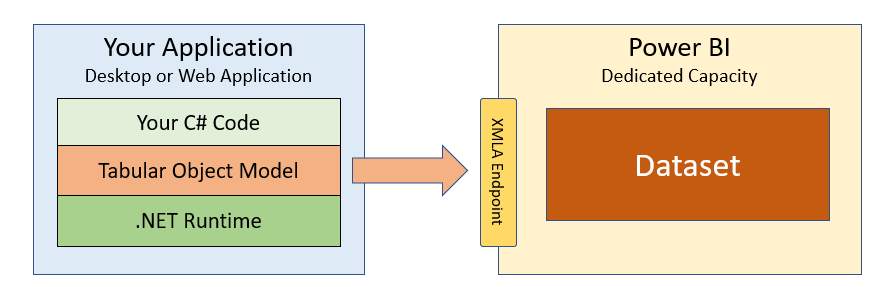

The XMLA endpoint for Power BI Premium datasets reached general availability in January 2021. The XMLA endpoint is significant to developers because it provides new APIs to interact with the Analysis Services engine running in the Power BI Service and to directly program against Power BI datasets. A growing number of Power BI professionals have found that they can create, view and manage Power BI datasets using pre-existing tools that use the XMLA protocol such as SQL Server Management Studio and Tabular Editor. As a .NET developer, there's great news in that you can now write C# code in a .NET application to create and modify datasets directly in the Power BI Service.

The Tabular Object Model (TOM) is a .NET library that provides an abstract layer on top of the XMLA endpoint. It allows developers to write code in terms of an intuitive programming model that includes classes like Model, Table, Column and Measure. Behind the scenes, TOM translates the read and write operations in your code into HTTP requests executed against the XMLA endpoint.

The focus of this article is getting started with TOM and demonstrating how to write the C# code required to create and modify datasets while they're running in the Power BI Service. However, you should note that TOM can also be used in scenarios that do not involve the XMLA endpoint, such as when programming against a local dataset running in Power BI Desktop. You can read through Phil Seamark's blog series and watch the video from the Power BI Dev Camp titled How to Program Datasets using the Tabular Object Model (TOM) to learn more about using TOM with Power BI Desktop.

TOM represents a new and powerful API for Power BI developers that is separate and distinct from the Power BI REST APIs. While there is some overlap between these two APIs, each of them includes a significant amount of functionality not included in the other. Furthermore, there are scenarios that require a developer to use both APIs together to implement a full solution.

Getting Started with the Tabular Object Model

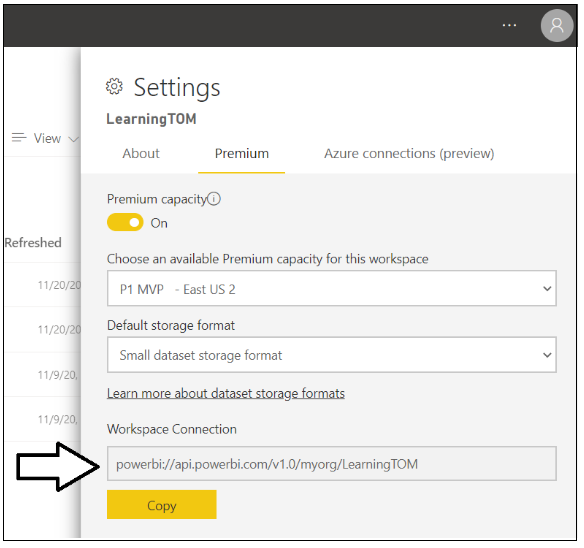

The first thing you need to get before you can program with TOM is the URL for a workspace connection. The workspace connection URL references a specific workspace and is used to create a connection string that allows your code to connect to that Power BI workspace and the datasets running inside. Start by navigating to the Settings page of a Power BI workspace running in a dedicated capacity.

Remember the XMLA endpoint is only supported for datasets running in a dedicated capacity. The XMLA endpoint is not available for datasets running in the shared capacity. If you are working with datasets in a Power BI Premium per User capacity, you can connect as a user but you cannot connect as a service principal.

Once you navigate to the Premium tab of the Settings pane, you can copy the Workspace Connection URL to the clipboard.

The next step is to create a new .NET application in which you will write the C# code that programs using TOM. You can create a Web application or a Desktop application using .NET 5, .NET Core 3.1 or older versions of the .NET Framework. In this article we will create a simple C# console application using the .NET 5 SDK.

Begin by using the .NET CLI to create a new console application.

    dotnet new console --name

The next thing you need to do is to add the NuGet package which contains the Tabular Object Model (TOM). The name of this NuGet package is Microsoft.AnalysisServices.AdomdClient.NetCore.retail.amd64 and you can install this package in a .NET 5 application using the following .NET CLI command.

    dotnet add package Microsoft.AnalysisServices.AdomdClient.NetCore.retail.amd64

Once your project has the NuGet package for the TOM library installed, you can write the traditional Hello World application with TOM that connects to a Power BI workspace using the Workspace Connection URL and then enumerates through the datasets in the workspace and displays their names in the console window.

    using System;
    using Microsoft.AnalysisServices.Tabular;

    class Program {
      static void Main() {

        // create the connect string
        string workspaceConnection = "powerbi://api.powerbi.com/v1.0/myorg/LearningTOM";
        string connectString = $"DataSource={workspaceConnection};";

        // connect to the Power BI workspace referenced in connect string
        Server server = new Server();
        server.Connect(connectString);

        // enumerate through datasets in workspace to display their names
        foreach (Database database in server.Databases) {
          Console.WriteLine(database.Name);
        }
      }
    }

Note that the connect string in this example contains the Workspace Connection URL but no information about the user. If you run the console application with this code, the application will begin to run and then you'll be prompted with a browser-based window to log in. If you log in with a user account that has permissions to access the workspace referenced by the Workspace Connection URL, the TOM library is able to acquire an access token, connect to the Power BI Service and enumerate through the datasets in the workspace.

For development and testing scenarios where security is not as critical, you can hard-code your user name and password into the code to eliminate the need to log in interactively each time you run a program to test your code.

    string workspaceConnection = "powerbi://api.powerbi.com/v1.0/myorg/YOUR_WORKSPACE";
    string userId = "YOUR_USER_NAME";
    string password = "YOUR_USER_PASSWORD";
    string connectStringUser = $"DataSource={workspaceConnection};User ID={userId};Password={password};";
    server.Connect(connectStringUser);

Note that it is not only possible but also quite easy to authenticate as the service principal instead of as a user. If you have created an Azure AD application with an Application ID and an application secret, you can authenticate your code to run as the service principal for the Azure AD application using the following code.

    string workspaceConnection = "powerbi://api.powerbi.com/v1.0/myorg/YOUR_WORKSPACE";
    string tenantId = "YOUR_TENANT_ID";
    string appId = "YOUR_APP_ID";
    string appSecret = "YOUR_APP_SECRET";
    string connectStringApp = $"DataSource={workspaceConnection};User ID=app:{appId}@{tenantId};Password={appSecret};";
    server.Connect(connectStringApp);

In order to program with TOM and access a dataset as a service principal, you must configure a tenant-level Power BI setting in the Power BI admin portal. The steps for configuring Power BI to support connecting as a service principal are covered in Embed Power BI content with service principal and an application secret.

TOM also provides the flexibility of establishing a connection using a valid Azure AD access token. If you have the developer skills to implement an authentication flow with Azure AD and acquire access tokens, you can format your TOM connection string without a user name and instead include the access token as the password.

    public static void ConnectToPowerBIAsUser() {
      string workspaceConnection = "powerbi://api.powerbi.com/v1.0/myorg/YOUR_WORKSPACE";
      string accessToken = TokenManager.GetAccessToken(); // you must implement GetAccessToken yourself
      string connectStringUser = $"DataSource={workspaceConnection};Password={accessToken};";
      server.Connect(connectStringUser);
    }

If you are acquiring a user-based access token to connect to a Power BI workspace with TOM, make sure to request the following delegated permissions when acquiring the access token to ensure you have all the authoring permissions you need.

    public static readonly string[] XmlaScopes = new string[] {
      "https://analysis.windows.net/powerbi/api/Content.Create",
      "https://analysis.windows.net/powerbi/api/Dataset.ReadWrite.All",
      "https://analysis.windows.net/powerbi/api/Workspace.ReadWrite.All",
    };

If you've been programming with the Power BI REST API, you might recognize familiar permissions such as Content.Create, Dataset.ReadWrite.All and Workspace.ReadWrite.All. An interesting observation is that TOM uses the same set of delegated permissions as the Power BI REST API, defined within the scope of the Azure AD resource ID of https://analysis.windows.net/powerbi/api.

The fact that both the XMLA endpoint and the Power BI REST API share the same set of delegated permissions has its benefits. Access tokens can be used interchangeably between TOM and the Power BI REST API. Once you have acquired an access token to call into TOM to create a new dataset, you can use the exact same access token to call the Power BI REST API to set the datasource credentials, which is discussed later in this article.

One thing that tends to confuse Power BI programmers is that service principals do not use delegated permissions. Instead, when programming with TOM you configure access for a service principal by adding it to the target workspace as a member in the role of Admin or Member.
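The four connection string shapes used in this section differ only in the User ID and Password parts, so it can help to see them side by side. The following is a minimal sketch, not part of the TOM library: the class name TomConnectionStrings and its method names are hypothetical, and the code only formats strings in the same way as the listings above.

```csharp
using System;

// Hypothetical helper (an assumption for illustration, not a TOM API):
// it only formats the connection strings shown earlier in this section.
public static class TomConnectionStrings {

  // Interactive login: only the Workspace Connection URL is supplied
  public static string Interactive(string workspaceConnection) =>
    $"DataSource={workspaceConnection};";

  // User name and password (development and testing scenarios only)
  public static string ForUser(string workspaceConnection, string userId, string password) =>
    $"DataSource={workspaceConnection};User ID={userId};Password={password};";

  // Service principal: the user ID takes the form app:{appId}@{tenantId}
  public static string ForServicePrincipal(string workspaceConnection, string tenantId,
                                           string appId, string appSecret) =>
    $"DataSource={workspaceConnection};User ID=app:{appId}@{tenantId};Password={appSecret};";

  // Access token: no user ID, the token is passed as the password
  public static string ForAccessToken(string workspaceConnection, string accessToken) =>
    $"DataSource={workspaceConnection};Password={accessToken};";
}
```

A call such as server.Connect(TomConnectionStrings.ForServicePrincipal(workspaceConnection, tenantId, appId, appSecret)) would then replace the inline string interpolation, keeping secrets out of the formatting code so they can be loaded from configuration instead.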

Understanding Servers, Datasets and Models

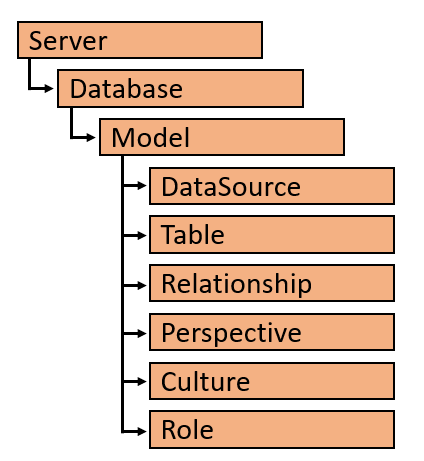

The object model in TOM is based on a hierarchy with a top-level Server object which contains a collection of Database objects. When programming with TOM in Power BI, the Server object represents a Power BI workspace and the Database object represents a Power BI dataset.

Each Database contains a Model object which provides read/write access to the data model associated with a Power BI dataset. The Model contains collections for the elements of a data model including DataSource, Table, Relationship, Perspective, Culture and Role.
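To make the shape of the Model object more concrete, here is a minimal sketch that reports how many objects sit in each of those collections. It assumes the TOM NuGet package is referenced; the class name ModelInspector and the method Summarize are hypothetical names chosen for illustration.

```csharp
using System;
using Microsoft.AnalysisServices.Tabular;

public static class ModelInspector {

  // Hypothetical helper: counts the objects in each collection of a Model
  public static string Summarize(Model model) {
    return
      $"DataSources={model.DataSources.Count}, " +
      $"Tables={model.Tables.Count}, " +
      $"Relationships={model.Relationships.Count}, " +
      $"Perspectives={model.Perspectives.Count}, " +
      $"Cultures={model.Cultures.Count}, " +
      $"Roles={model.Roles.Count}";
  }
}
```

Given a connected Server object, something like Console.WriteLine(ModelInspector.Summarize(server.Databases.GetByName("Wingtip Sales").Model)) would print one line per dataset you inspect.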

As shown in the Hello World code listing, once you call server.Connect, you can easily discover what datasets exist within a Power BI workspace by enumerating through the Databases collection of the Server object.

    foreach (Database database in server.Databases) {
      Console.WriteLine(database.Name);
    }

You can also use the GetByName method exposed by the Databases collection object to access a dataset by its name.

    Database database = server.Databases.GetByName("Wingtip Sales");

It is important to distinguish between a Database object and its inner Model property. You can use Database object properties to discover dataset attributes such as Name, ID, CompatibilityMode and CompatibilityLevel. There is also an EstimatedSize property which makes it possible to discover how large a dataset has grown. Other significant properties include LastUpdate, LastProcessed and LastSchemaUpdate, which allow you to determine when the underlying dataset was last refreshed and when the dataset schema was last updated.

    public static void GetDatabaseInfo(string DatabaseName) {
      Database database = server.Databases.GetByName(DatabaseName);
      Console.WriteLine("Name: " + database.Name);
      Console.WriteLine("ID: " + database.ID);
      Console.WriteLine("CompatibilityMode: " + database.CompatibilityMode);
      Console.WriteLine("CompatibilityLevel: " + database.CompatibilityLevel);
      Console.WriteLine("EstimatedSize: " + database.EstimatedSize);
      Console.WriteLine("LastUpdated: " + database.LastUpdate);
      Console.WriteLine("LastProcessed: " + database.LastProcessed);
      Console.WriteLine("LastSchemaUpdate: " + database.LastSchemaUpdate);
    }

While the Database object has its own properties, it is the inner Model object of a Database object that provides you with the ability to read and write to a dataset's underlying data model. Here is a simple example of programming the database Model object to enumerate through its Tables collection and discover what tables are inside.
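A minimal sketch of that enumeration, assuming the TOM NuGet package is referenced. The class name TableLister and the method GetTableNames are hypothetical; only the Model, Table and Tables members come from TOM.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.AnalysisServices.Tabular;

public static class TableLister {

  // Hypothetical helper: collects the name of every table in a data model
  public static List<string> GetTableNames(Model databaseModel) {
    var names = new List<string>();
    foreach (Table table in databaseModel.Tables) {
      names.Add(table.Name);
    }
    return names;
  }
}
```

With a connected Server object, the names could be printed by passing server.Databases.GetByName("Tom Demo").Model to GetTableNames and writing each entry to the console, where "Tom Demo" is the dataset name used in the other listings in this article.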

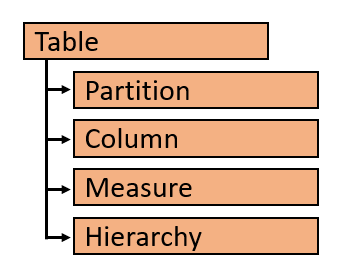

In the TOM object model, each Table object has collection objects for its partitions, columns, measures and hierarchies.

Once you have retrieved the Model object for a Database, you can access a specific table by name in the model using the Find method of the Tables collection. Here is an example retrieving a table named Sales and discovering its members by enumerating through its Columns collection and its Measures collection.

    Model databaseModel = server.Databases.GetByName("Tom Demo").Model;
    Table tableSales = databaseModel.Tables.Find("Sales");

    foreach (Column column in tableSales.Columns) {
      Console.WriteLine("Column: " + column.Name);
    }

    foreach (Measure measure in tableSales.Measures) {
      Console.WriteLine("Measure: " + measure.Name);
      Console.WriteLine(measure.Expression);
    }

Modifying Datasets using TOM

Up to this point, you have seen how to access a Database object and its inner Model object to inspect the data model of a dataset running in the Power BI Service. Now it's time to program our first dataset update using TOM by adding a measure to a table.

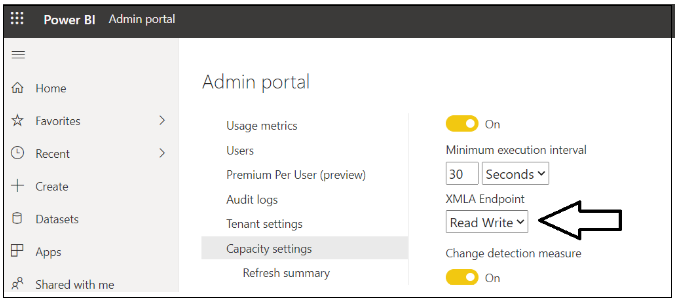

Remember that the XMLA endpoint you are using must be configured for read/write access. By default, the XMLA endpoint permission setting is set to Read, so it must be explicitly set to Read Write by someone with Capacity Admin permissions. This setting can be viewed and updated in the Capacity settings page in the Admin portal.

Once the XMLA endpoint has been configured with read/write access, you can write the following code to add a new measure named Sales Revenue to the Sales table.

    Model dataset = server.Databases.GetByName("Tom Demo Starter").Model;
    Table tableSales = dataset.Tables.Find("Sales");
    Measure salesRevenue = new Measure();
    salesRevenue.Name = "Sales Revenue";
    salesRevenue.Expression = "SUM(Sales[SalesAmount])";
    salesRevenue.FormatString = "$#,##0.00";
    tableSales.Measures.Add(salesRevenue);
    dataset.SaveChanges();

Let's walk through this code. First, you create a new Measure object using the C# new operator and provide values for the Name, Expression and FormatString. Second, you add the new Measure object to the Measures collection of the target Table object by calling the Add method. Finally, you call the SaveChanges method of the Model object to write your changes back to the dataset in the Power BI Service.
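One practical wrinkle with this walkthrough is that running it a second time attempts to add a measure whose name is already in use, which is likely to fail. A minimal sketch of one way around that, assuming the TOM NuGet package is referenced, is to look the measure up with Find first and only add it when it is missing. The class name MeasureHelper and the method AddOrUpdateMeasure are hypothetical.

```csharp
using System;
using Microsoft.AnalysisServices.Tabular;

public static class MeasureHelper {

  // Hypothetical helper: adds the measure if it is missing, otherwise updates it in place.
  // The caller is still responsible for calling SaveChanges on the Model.
  public static Measure AddOrUpdateMeasure(Table table, string name,
                                           string expression, string formatString) {
    // Find returns null when no measure with this name exists in the table
    Measure measure = table.Measures.Find(name);
    if (measure == null) {
      measure = new Measure() { Name = name };
      table.Measures.Add(measure);
    }
    measure.Expression = expression;
    measure.FormatString = formatString;
    return measure;
  }
}
```

For example, MeasureHelper.AddOrUpdateMeasure(tableSales, "Sales Revenue", "SUM(Sales[SalesAmount])", "$#,##0.00") followed by dataset.SaveChanges() would have the same effect as the listing above on the first run and simply update the measure on later runs.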

Keep in mind that updates to a dataset are batched in memory until you call SaveChanges. Imagine a scenario where you'd like to hide every column in a table. You can start by writing a foreach loop to enumerate through all the Column objects for a table and setting the IsHidden property for each Column object to true. After the foreach loop completes, you have several column updates that are batched in memory. It's the final call to SaveChanges that pushes all the changes back to the Power BI Service in a batch.

    Model dataset = server.Databases.GetByName("Tom Demo").Model;
    Table tableSales = dataset.Tables.Find("Sales");

    foreach (Column column in tableSales.Columns) {
      column.IsHidden = true;
    }

    dataset.SaveChanges();

Let's say you want to update the FormatString property for an existing column. The Columns collection exposes a Find method to retrieve the target Column object. After that, it's just a matter of setting the FormatString property and calling SaveChanges.

    Model dataset = server.Databases.GetByName("Tom Demo").Model;
    Table tableProducts = dataset.Tables.Find("Products");
    Column columnListPrice = tableProducts.Columns.Find("List Price");
    columnListPrice.FormatString = "$#,##0.00";
    dataset.SaveChanges();

TOM's ability to dynamically discover what's inside a dataset provides opportunities to perform updates in a generic and sweeping fashion. Imagine a scenario in which you are managing a dataset which has lots of tables and tens or hundreds of columns based on the DateTime datatype. You can update the FormatString property for every DateTime column in the entire dataset at once using the following code.

    Database database = server.Databases.GetByName("Tom Demo Starter");
    Model datasetModel = database.Model;

    foreach (Table table in datasetModel.Tables) {
      foreach (Column column in table.Columns) {
        if (column.DataType == DataType.DateTime) {
          column.FormatString = "yyyy-MM-dd";
        }
      }
    }

    datasetModel.SaveChanges();

Refreshing Datasets using TOM

Now let's perform a typical dataset maintenance operation. As you can see, it's not very complicated to start a dataset refresh operation using TOM.

    public static void RefreshDatabaseModel(string Name) {
      Database database = server.Databases.GetByName(Name);
      database.Model.RequestRefresh(RefreshType.DataOnly);
      database.Model.SaveChanges();
    }

One strange thing to call out is that dataset refresh operations initiated through the XMLA endpoint do not show up in the Power BI Service. For example, imagine you start a refresh operation with TOM and then you navigate to the Power BI Service and inspect the dataset refresh history. You will not see any refresh operations initiated by TOM. However, when you inspect the data in the dataset you will see that the refresh operation did actually take place.

Note that while TOM provides the ability to begin a refresh operation, it does not provide any capabilities to set datasource credentials for a Power BI dataset. In order to refresh datasets with TOM, you must first set the datasource credentials for the dataset using another technique, such as setting datasource credentials by hand in the Power BI Service or setting datasource credentials with code using the Power BI REST APIs.
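A refresh does not have to cover the whole model. The Table class in TOM also exposes a RequestRefresh method, so a single table can be refreshed on its own. The following is a minimal sketch under that assumption: the class name RefreshHelper and the method RefreshTable are hypothetical, and the datasource credentials must already be set as described above.

```csharp
using System;
using Microsoft.AnalysisServices.Tabular;

public static class RefreshHelper {

  // Hypothetical helper: requests a refresh for one table instead of the whole model
  public static void RefreshTable(Model model, string tableName) {
    // Find returns null when the table does not exist in the model
    Table table = model.Tables.Find(tableName);
    if (table == null) {
      throw new ArgumentException($"Table '{tableName}' was not found in the model.");
    }
    // The refresh request is queued in memory and sent by SaveChanges
    table.RequestRefresh(RefreshType.Full);
    model.SaveChanges();
  }
}
```

A call such as RefreshHelper.RefreshTable(server.Databases.GetByName("Tom Demo").Model, "Sales") would then refresh only the Sales table, which can be noticeably cheaper than a full model refresh when only one source has changed.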

Creating and Cloning Datasets

Imagine you have a requirement to create and clone Power BI datasets using code written in C#. Let's begin by writing a reusable function named CreateDatabase that creates a new Database object.

    public static Database CreateDatabase(string DatabaseName) {

      string newDatabaseName = server.Databases.GetNewName(DatabaseName);

      var database = new Database() {
        Name = newDatabaseName,
        ID = newDatabaseName,
        CompatibilityLevel = 1520,
        StorageEngineUsed = Microsoft.AnalysisServices.StorageEngineUsed.TabularMetadata,
        Model = new Model() {
          Name = DatabaseName + "-Model",
          Description = "A Demo Tabular data model with 1520 compatibility level."
        }
      };

      server.Databases.Add(database);
      database.Update(Microsoft.AnalysisServices.UpdateOptions.ExpandFull);

      return database;
    }

In this example we start by using the GetNewName method of the Databases collection object to ensure our new dataset name is unique within the target workspace. After that, the Database object and its inner Model object are created using the C# new operator as shown in the code above. At the end, this method adds the new Database object to the Databases collection and calls the database.Update method.

If your goal is to copy an existing dataset instead of creating a new one, you can use the following CopyDatabase method to clone a Power BI dataset by creating a new empty dataset and then calling CopyTo on the Model object for the source dataset to copy the entire data model into the newly created dataset.

    public static Database CopyDatabase(string sourceDatabaseName, string DatabaseName) {

      Database sourceDatabase = server.Databases.GetByName(sourceDatabaseName);

      string newDatabaseName = server.Databases.GetNewName(DatabaseName);
      Database targetDatabase = CreateDatabase(newDatabaseName);

      sourceDatabase.Model.CopyTo(targetDatabase.Model);
      targetDatabase.Model.SaveChanges();

      targetDatabase.Model.RequestRefresh(RefreshType.Full);
      targetDatabase.Model.SaveChanges();

      return targetDatabase;
    }

Creating a Real-World Dataset from Scratch

OK, now imagine you have just created a new dataset from scratch and now you need to use TOM to compose a real-world data model by adding tables, columns, measures, hierarchies and table relationships. Let's look at an example of creating a new table with code that includes defining columns, adding a three-level dimensional hierarchy and even supplying the M code for the underlying table query.

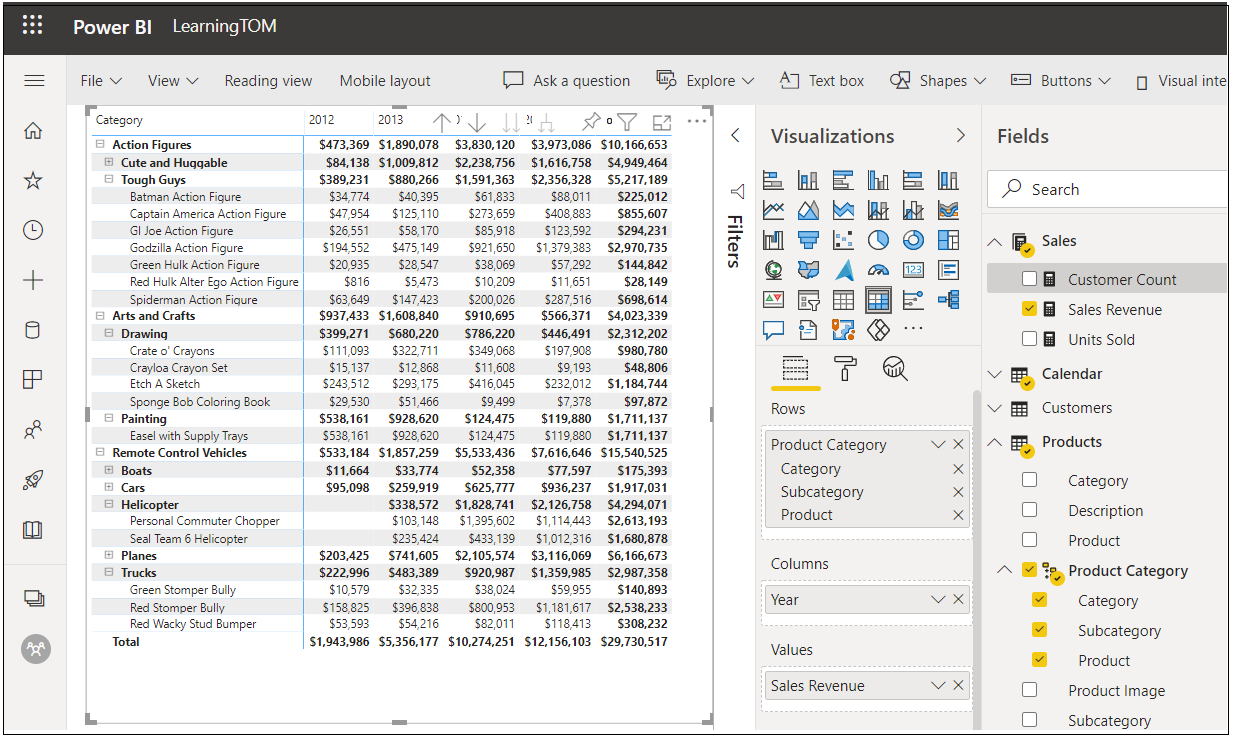

    private static Table CreateProductsTable() {

      Table productsTable = new Table() {
        Name = "Products",
        Description = "Products table",
        Partitions = {
          new Partition() {
            Name = "All Products",
            Mode = ModeType.Import,
            Source = new MPartitionSource() {
              // M code for query maintained in separate source file
              Expression = Properties.Resources.ProductQuery_m
            }
          }
        },
        Columns = {
          new DataColumn() { Name = "ProductId", DataType = DataType.Int64, SourceColumn = "ProductId", IsHidden = true },
          new DataColumn() { Name = "Product", DataType = DataType.String, SourceColumn = "Product" },
          new DataColumn() { Name = "Description", DataType = DataType.String, SourceColumn = "Description" },
          new DataColumn() { Name = "Category", DataType = DataType.String, SourceColumn = "Category" },
          new DataColumn() { Name = "Subcategory", DataType = DataType.String, SourceColumn = "Subcategory" },
          new DataColumn() { Name = "Product Image", DataType = DataType.String, SourceColumn = "ProductImageUrl", DataCategory = "ImageUrl" }
        }
      };

      productsTable.Hierarchies.Add(
        new Hierarchy() {
          Name = "Product Category",
          Levels = {
            new Level() { Ordinal=0, Name="Category", Column=productsTable.Columns["Category"] },
            new Level() { Ordinal=1, Name="Subcategory", Column=productsTable.Columns["Subcategory"] },
            new Level() { Ordinal=2, Name="Product", Column=productsTable.Columns["Product"] }
          }
        });

      return productsTable;
    }

Once you have created a set of helper methods to create the tables, you can compose them together to create a data model.

    Model model = database.Model;

    Table tableCustomers = CreateCustomersTable();
    Table tableProducts = CreateProductsTable();
    Table tableSales = CreateSalesTable();
    Table tableCalendar = CreateCalendarTable();

    model.Tables.Add(tableCustomers);
    model.Tables.Add(tableProducts);
    model.Tables.Add(tableSales);
    model.Tables.Add(tableCalendar);

TOM exposes a Relationships collection on the Model object which allows you to define the relationships between the tables in your model. Here's the code required to create a SingleColumnRelationship object which establishes a one-to-many relationship between the Products table and the Sales table.

    model.Relationships.Add(new SingleColumnRelationship {
      Name = "Products to Sales",
      ToColumn = tableProducts.Columns["ProductId"],
      ToCardinality = RelationshipEndCardinality.One,
      FromColumn = tableSales.Columns["ProductId"],
      FromCardinality = RelationshipEndCardinality.Many
    });

After you are done adding the tables and table relationship, you can save your work with a call to model.SaveChanges.

    model.SaveChanges();

At this point, after calling SaveChanges, you should be able to see the new dataset created in the Power BI Service and begin using it to create new reports.

Remember that you will need to set the datasource credentials by hand or through the Power BI REST API before you can refresh the dataset.

The sample project with the C# code you've seen in this article is available here. Now it's time for you to start programming with TOM and to find ways to leverage this powerful new API in the development of custom solutions for Power BI.


Source: https://www.powerbidevcamp.net/articles/programming-datasets-with-TOM/